Week 3: Exploring Motion Tracking

INTRODUCTION:

Hello! Here's my blog for this quarter's version of New Media Project in digital media. I will be working on the more artistic of the three group projects occurring this quarter, focusing on interactive wearable digital technology. Given my history in costuming and fabrication, as well as theater and performance, I'm eager to help out with this group and make some cool performance art both augmented and defined by the integration of digital media.

THE STORY SO FAR:

INITIAL IDEA:

Our group of 11 people formed through a shared interest in investigating wearable technology. I personally have 8 years of professional theater costuming and fabrication experience, and have worked with integrating very simple lights and electronics in costumes before, so I was very excited by the idea of expanding my skillset in that regard. Vivian was particularly enthusiastic about wearable tech as well, and we somehow ended up with a whole bunch of other people joining us.

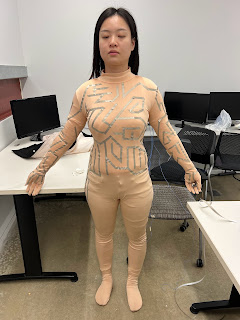

Our initial idea was to create a dance performance between two people wearing touch-reactive suits, where the location and intensity of the touches they exchanged during the dance would influence the procedurally generated music they were dancing to. Harsh touches would result in harsh music, etc. I thought this was a very funny and not particularly realistic idea, because it would inevitably result in a dance that was mostly two people standing around hitting each other as hard as possible, which is hilarious in theory but sucks in practice. It also wouldn't be practical: Yash explained that any touch sensors we incorporate would be ineffective at distinguishing pressure differences and would need to operate on a binary "touched" or "not touched" system.

Regardless, we split into three sub-groups and spent that first week with each sub-group investigating existing work in one area: wearable tech sensors, music psychology, or performance art. I personally went searching for examples of dancers already incorporating electronic wearables into their performances, and found Clemence Debaig's project AUTOMATA. She built a wearable piece of art consisting of five flowers that opened and closed as she danced, along with a folding and unfolding shoulder piece. Accelerometers on her arms and legs drove servos that moved each of these elements independently as she danced. I included this project in last week's presentation.

LAST WEEK - Clarifying our idea:

When we presented our findings to Paul, he was quick to emphasize the difference between wearable electronics and digital media. That was sad for me to hear, since it meant I had been barking up the wrong tree, but it was a necessary distinction that I think put us on a much better path. Paul suggested we consider what we want our audience's experience of the piece to be, and that helped me come up with a new idea for the project.

I pitched my new idea to the group: instead of having two dancers interact while the audience stands around watching, the performance would center on the audience interacting with the performer. The exciting element of digital media, after all, is interactivity, and we needed to get the audience involved. It also made sense to reduce the number of suits to just one, since that would significantly decrease the workload and shift the emphasis to interaction between audience and performer, rather than between two performers with the audience as static observers. My main idea, which I had to pitch about four times before everyone started agreeing with me, was that the "performer" wearing the suit would have no set choreography or movement at all. Instead, they would serve as an unconventional controller for the music and projection show behind them. Audience members would be invited to touch them, puppeteer them around, and dance with them, and the performer's motion and the way people touch them would influence the musical accompaniment and the visual display on the projector. I'm super excited about this idea and pushed for it for about an hour to get everyone on board, haha.

THIS WEEK - Testing in the Motion Capture Studio:

This week we once again split up into 3 sub-groups to tackle different elements of research for the project: Motion, Touch, and Visuals.

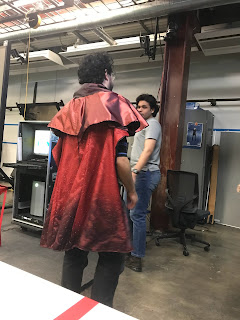

I was part of the motion group, so under Lara's guidance we booked the motion capture studio for Friday afternoon and spent about four hours there playing with the system to see what it was capable of. Since I'm not well versed in this technology, I volunteered to wear the markers and be the test subject, spending most of the time walking around the room in circles and waving my arms as requested while Lara and Yash in particular worked the computers. Photographic evidence:

We were trying to decide whether the motion capture room would be the best way to track the puppet performer's motion, or whether something like a Kinect would serve our purposes just as well or better. Yash got a Kinect from somewhere and then spent an hour or two trying to get the Kinect and the motion capture rig in Shogun running simultaneously. He finally got it working with help from Nick and Lara, while Varun and I served as models and helped experiment with how the Kinect tracks bodies and indexes them.

The biggest difficulty with the Kinect would be isolating the movement of the one puppet performer: we don't want to track the other people puppeteering them, and we don't want to lose tracking when audience members block parts of the performer's body. Yash suggested using two Kinects at once, one in front and one behind, but even then there would still be the indexing problem. When there's one person in the Kinect's field of view, it assigns them an index number and color. When a new person joins, the Kinect gives BOTH people a new number and color, not recognizing that one of them is the same continuous presence. I'm worried that this renumbering would make it extremely difficult to isolate data from a single performer, but Yash knows more about this than I do.
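One possible workaround for the renumbering problem, sketched here purely as an illustration and not as what our group has built, is to ignore the Kinect's index numbers entirely and instead follow the performer by position: each frame, pick whichever detected body is closest to where the performer was last seen. This assumes (hypothetically) that each frame can be reduced to a list of (x, z) floor positions in meters, one per detected body, and that the performer can't move more than a hypothetical `max_jump` of 0.5 m between frames. The function names are made up for this example.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical (x, z) floor position of one detected body, in meters.
Point = Tuple[float, float]


def pick_performer(last_pos: Point, bodies: Sequence[Point],
                   max_jump: float = 0.5) -> Optional[int]:
    """Return the list index of the body nearest the performer's last known
    position, or None if no body is within max_jump meters of it."""
    best_index = None
    best_dist = max_jump
    for i, pos in enumerate(bodies):
        d = math.dist(last_pos, pos)
        if d <= best_dist:
            best_index, best_dist = i, d
    return best_index


def track(frames: Sequence[Sequence[Point]], start: Point,
          max_jump: float = 0.5) -> List[Point]:
    """Follow one performer across frames, regardless of how the sensor
    orders or renumbers the bodies it detects."""
    last = start
    path = []
    for bodies in frames:
        idx = pick_performer(last, bodies, max_jump)
        if idx is not None:
            last = bodies[idx]
        # If the performer is hidden (no nearby body), hold the last position.
        path.append(last)
    return path


# An audience member joins in the second frame and the body order changes,
# but the performer is still followed by position.
frames = [
    [(0.0, 2.0)],
    [(1.5, 2.0), (0.1, 2.0)],
    [(0.2, 2.1), (1.4, 2.0)],
]
print(track(frames, (0.0, 2.0)))
```

The obvious weakness is that if an audience member steps right up against the performer, the two could get swapped, so this would likely need to be combined with something else (a second sensor, or markers on the suit) rather than relied on alone.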

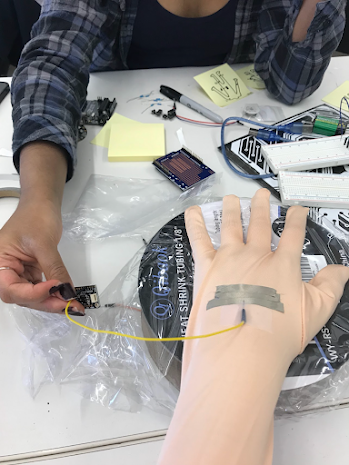

INVESTIGATING SENSORS:

Today (Monday), Emil kindly took Varun and me under his wing and showed us a bunch of the different sensors he has in his office. He has some very interesting slide touch sensors, and he talked about how we should consider which types of touch we want to use (sustained, moving in sequence, short vs. long, etc.) and to what effect in our design. He also asked how we would encourage people to touch our performer, and I said I was imagining embroidering hand prints on the performer's suit to indicate where they should be touched. He showed us some touch-receptive sensors and let me test their rigidity and feel so I can better envision how I might sew them into the final product. He also pointed me to recent graduate Alejandro Lopez's thesis, which involved tactile sensors within quilting, and walked me through how the electronics were attached to snaps and the importance of not short-circuiting anything. Emil also reminded me of a website he sent me last spring, Kobakant, which has lots of super interesting and useful information on wearables. Thank you, Father Emil.
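Since our sensors can only report "touched" or "not touched," distinguishing the types of touch Emil mentioned (like short vs. long) would have to come from timing. Here's a rough sketch of that idea, assuming (hypothetically) that a sensor is read as a True/False value every 10 ms and that anything held for 500 ms or more counts as a "hold." The sample rate, threshold, and function name are all made up for illustration, not taken from any real sensor.

```python
from typing import List, Sequence, Tuple


def classify_touches(samples: Sequence[bool], sample_ms: int = 10,
                     long_ms: int = 500) -> List[Tuple[str, int]]:
    """Group consecutive 'touched' readings into individual touches and label
    each one 'tap' or 'hold' based on its duration in milliseconds."""
    touches = []
    run = 0  # number of consecutive touched samples in the current touch
    # Appending a final False closes out a touch still in progress at the end.
    for touched in list(samples) + [False]:
        if touched:
            run += 1
        elif run:
            duration = run * sample_ms
            label = "hold" if duration >= long_ms else "tap"
            touches.append((label, duration))
            run = 0
    return touches


# A quick 20 ms tap followed by a 600 ms hold.
readings = [False, True, True, False] + [True] * 60 + [False]
print(classify_touches(readings))
```

The same approach could extend to "moving in sequence" touches by comparing when neighboring sensors on the suit start and stop being touched.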

I hope this is sufficient progress to show off tomorrow!
